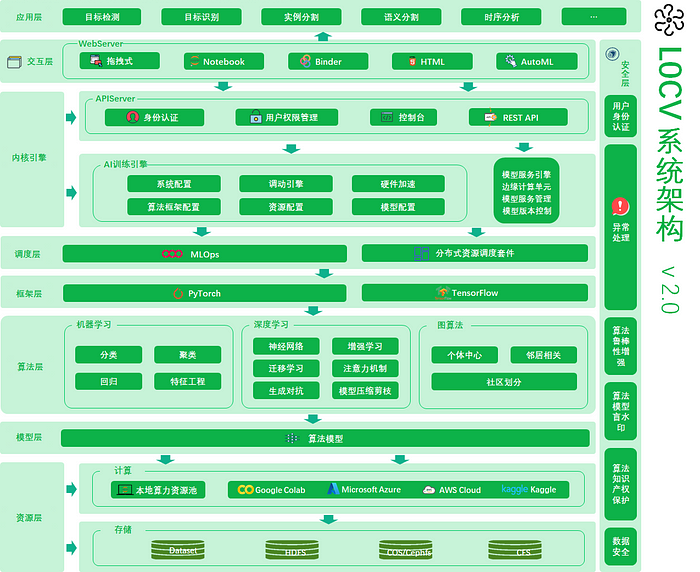

An easy-to-go tool-chain for computer vision with MLOps, AutoML and Data Security

L0CV is a new generation of open-source online learning media for computer vision: a cross-platform, interactive learning framework that integrates graphics, source code, and HTML. The L0CV ecosystem comprises Notebook, Datasets, Source Code, material ranging from Diving-in to Advanced, and the L0CV Hub.

🌍 English | 简体中文| 日本語 | Українською


Quickstart • Notebook • Community • Docs

Note: Please raise an issue for any suggestions, corrections, and feedback.

The goal of this repo is to build an easy-to-go computer vision tool-chain that covers the basics of MLOps: model building, monitoring, configuration, testing, packaging, deployment, CI/CD, etc.

Features

- 📕 Summary

- 🍃 Data & Models Version Control — DVC

- ⛳ Model Packaging — ONNX

- 🐾 Model Packaging — Docker

- 🍀 CI/CD — GitHub Actions

- ⭐️ Serverless Deployment — AWS Lambda

- 🌴 Container Registry — AWS ECR

- ⏳ Prediction Monitoring — Kibana

📘 Summary

All Rights Reserved ©Charmve

Commercial use is prohibited.

🍃 Data & Models Version Control — DVC

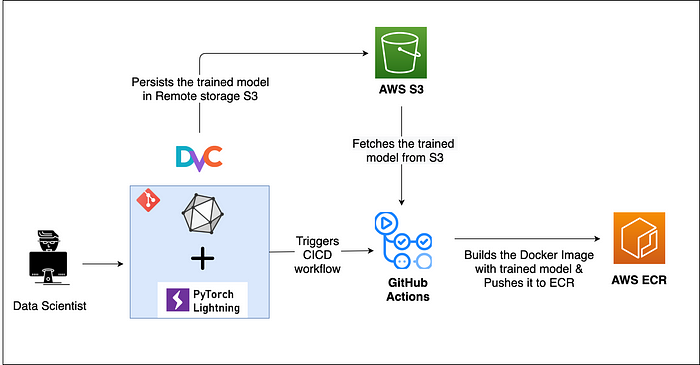

DVC usually runs alongside Git. Git is used as usual to store and version code (including DVC meta-files). DVC stores data and model files outside of Git, while preserving almost the same user experience as if they were stored in Git itself. To store and share the data cache, DVC supports multiple remotes: any cloud (S3, Azure, Google Cloud, etc.) or any on-premise network storage (via SSH, for example).
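To make the idea concrete, here is a minimal sketch of how a large file can live outside Git while a small pointer file is versioned in its place. It is a conceptual illustration only, not DVC's actual implementation: the function names `track` and `restore`, the `.ptr.json` pointer format, the SHA-256 checksum, and the cache layout are all assumptions made for this example.

```python
import hashlib
import json
import shutil
from pathlib import Path


def file_checksum(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def track(data_file: Path, cache_dir: Path) -> Path:
    """Copy data_file into a content-addressed cache and write a pointer file.

    The pointer file is tiny, so it is the part that would be committed to Git.
    """
    checksum = file_checksum(data_file)
    cached = cache_dir / checksum[:2] / checksum[2:]
    cached.parent.mkdir(parents=True, exist_ok=True)
    if not cached.exists():  # identical content is stored only once
        shutil.copy2(data_file, cached)
    pointer = data_file.with_name(data_file.name + ".ptr.json")
    pointer.write_text(json.dumps({"sha256": checksum, "path": data_file.name}))
    return pointer


def restore(pointer: Path, cache_dir: Path) -> Path:
    """Recreate the data file next to its pointer using the cached copy."""
    meta = json.loads(pointer.read_text())
    cached = cache_dir / meta["sha256"][:2] / meta["sha256"][2:]
    target = pointer.with_name(meta["path"])
    shutil.copy2(cached, target)
    return target
```

In this sketch the cache directory plays the role of a remote: anyone who has the pointer file and access to the cache can recover the exact bytes that were tracked.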

The DVC pipelines (computational graph) feature connects code and data together. It is possible to explicitly specify all steps required to produce a model: input dependencies including data, commands to run, and output information to be saved. See the quick start section below or the Get Started tutorial to learn more.
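The notion of a stage with declared dependencies, a command, and outputs can be sketched in plain Python. This is an illustrative model under stated assumptions rather than DVC's own code: the `Stage` class, its `needs_run` and `run` methods, and the JSON lock-file format are hypothetical names chosen for this example.

```python
import hashlib
import json
import subprocess
from dataclasses import dataclass
from pathlib import Path
from typing import Dict, List


def checksum(path: Path) -> str:
    """Hex SHA-256 of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


@dataclass
class Stage:
    """One pipeline step: input dependencies, a command, and expected outputs."""

    name: str
    cmd: List[str]    # command to run, as an argument list
    deps: List[Path]  # input files the command reads
    outs: List[Path]  # files the command is expected to produce
    lock_file: Path   # records dependency checksums from the last run

    def _current(self) -> Dict[str, str]:
        return {str(dep): checksum(dep) for dep in self.deps}

    def needs_run(self) -> bool:
        """True if the stage never ran, an output is missing, or a dependency changed."""
        if not self.lock_file.exists() or not all(out.exists() for out in self.outs):
            return True
        return json.loads(self.lock_file.read_text()) != self._current()

    def run(self) -> bool:
        """Run the command only when needed; return whether it actually ran."""
        if not self.needs_run():
            return False
        subprocess.run(self.cmd, check=True)
        self.lock_file.write_text(json.dumps(self._current()))
        return True
```

Calling `run()` twice in a row executes the command once; editing any file listed in `deps` makes the next call execute it again, which is the behavior that ties code and data together in a reproducible pipeline.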

- reference — https://github.com/iterative/dvc

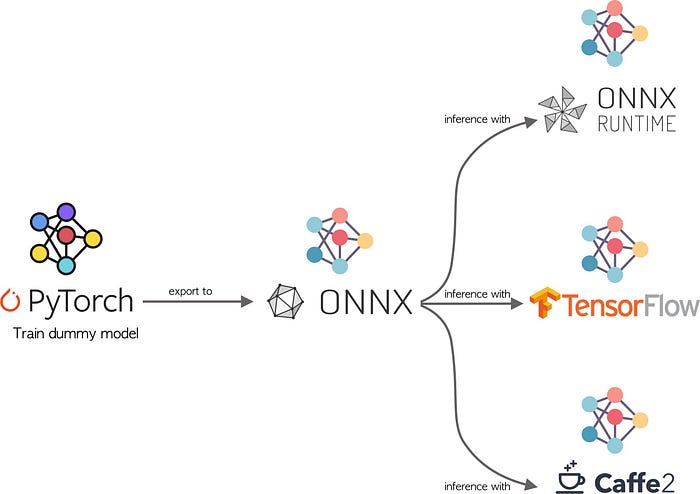

⛳ Model Packaging — ONNX

Why do we need model packaging? Models can be built with any machine learning framework (scikit-learn, TensorFlow, PyTorch, etc.). We might want to deploy them in different environments (mobile, web, Raspberry Pi) or run them in a different framework (trained in PyTorch, inference in TensorFlow). A common file format that lets AI developers use models with a variety of frameworks, tools, runtimes, and compilers helps a lot.

This is achieved by ONNX, a community project.
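As a rough sketch of what this looks like in practice, the snippet below exports a PyTorch model to an ONNX file and runs it with ONNX Runtime. It assumes PyTorch and `onnxruntime` are installed (they are imported lazily inside the functions). The helper names `onnx_export_options`, `export_to_onnx`, and `predict_with_onnxruntime`, the tensor names `input` and `output`, and the opset version are choices made for this example, not something this repo prescribes.

```python
from typing import Any, Dict


def onnx_export_options(input_name: str = "input", output_name: str = "output") -> Dict[str, Any]:
    """Keyword arguments for torch.onnx.export that keep the batch size dynamic."""
    return {
        "input_names": [input_name],
        "output_names": [output_name],
        # Axis 0 is named so the exported graph accepts any batch size.
        "dynamic_axes": {input_name: {0: "batch"}, output_name: {0: "batch"}},
        "opset_version": 13,  # assumption: pick an opset your runtime supports
    }


def export_to_onnx(model: Any, sample_input: Any, path: str) -> None:
    """Trace a PyTorch model with a sample input and write an ONNX file."""
    import torch  # lazy import: only needed when exporting

    model.eval()  # switch off dropout and batch-norm updates before tracing
    torch.onnx.export(model, (sample_input,), path, **onnx_export_options())


def predict_with_onnxruntime(path: str, batch: Any) -> Any:
    """Run the exported model without PyTorch, using ONNX Runtime on CPU."""
    import onnxruntime as ort  # lazy import: only needed at inference time

    session = ort.InferenceSession(path, providers=["CPUExecutionProvider"])
    input_name = session.get_inputs()[0].name
    # run(None, ...) returns all model outputs; this sketch assumes exactly one.
    return session.run(None, {input_name: batch})[0]
```

A typical call would export with a sample tensor shaped like a real input, then pass a `float32` NumPy array of the same trailing shape to `predict_with_onnxruntime`.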

The following tech stack is used:

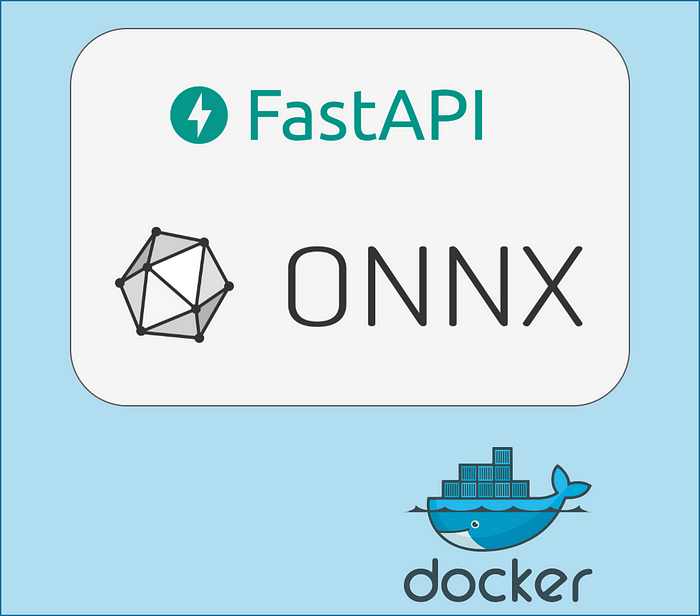

🐾 Model Packaging — Docker

Why do we need packaging? We might have to share our application with others, and when they try to run it, it often fails because of dependency or OS-related issues. This is the origin of the famous engineering quote: "It works on my laptop/system."

For others to run the application, they have to set up the same environment as the one on the host side, which means a lot of manual configuration and installation of components.

The solution to these limitations is a technology called Containers.

By containerizing (packaging) the application, we can run it on any cloud platform and benefit from managed services, autoscaling, reliability, and more.

The most prominent tool for packaging applications is Docker 🛳

🍀 CI/CD — GitHub Actions

Refer to the Blog Post here

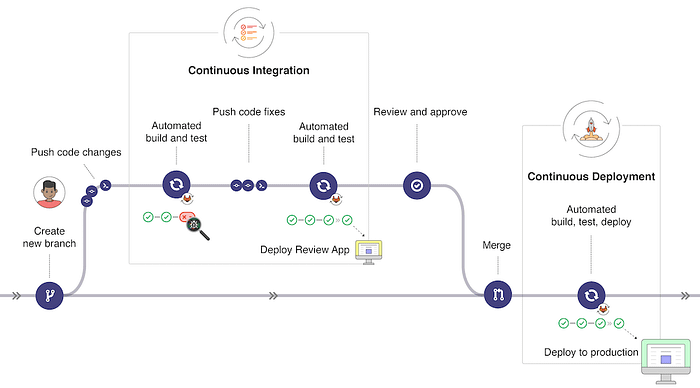

CI/CD is a coding philosophy and set of practices with which you can continuously build, test, and deploy iterative code changes.

This iterative process helps reduce the chance that you develop new code based on buggy or failed previous versions. With this method, you strive for less human intervention, or even none at all, from the development of new code until its deployment.

🌴 Container Registry — AWS ECR

A container registry is a place to store container images. A container image is a file comprised of multiple layers which can execute applications in a single instance. Hosting all the images in one stored location allows users to commit, identify and pull images when needed.

Amazon Simple Storage Service (S3) is storage for the internet. It is designed to provide large-capacity, low-cost storage across multiple geographical regions.

⭐️ Serverless Deployment — AWS Lambda

A serverless architecture is a way to build and run applications and services without having to manage infrastructure. The application still runs on servers, but all the server management is done by a third-party service (AWS). We no longer have to provision, scale, and maintain servers to run the applications. By using a serverless architecture, developers can focus on their core product instead of worrying about managing and operating servers or runtimes, either in the cloud or on-premises.
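For illustration, a Python function deployed to AWS Lambda is just a handler that receives an event and returns a response. The sketch below assumes the function sits behind an HTTP front end that passes the request as a JSON string in `event["body"]`. The `predict` function is a hypothetical stand-in for real model inference, and the `pixels` field is an invented input format.

```python
import json
from typing import Any, Dict, List


def predict(pixels: List[float]) -> Dict[str, Any]:
    """Hypothetical placeholder for model inference.

    Classifies by mean intensity so the handler can be exercised locally.
    """
    score = sum(pixels) / len(pixels) if pixels else 0.0
    return {"label": "bright" if score > 0.5 else "dark", "score": score}


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point that Lambda invokes once per request."""
    try:
        body = json.loads(event.get("body") or "{}")
        pixels = [float(value) for value in body["pixels"]]
    except (KeyError, TypeError, ValueError):
        # Malformed JSON, a missing field, or non-numeric values all end up here.
        return {
            "statusCode": 400,
            "body": json.dumps({"error": "expected a JSON body with a 'pixels' list"}),
        }
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(predict(pixels)),
    }
```

Because the handler is a plain function, it can be tested locally by calling `lambda_handler({"body": "..."}, None)` before anything is deployed.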

⏳ Prediction Monitoring — Kibana

Monitoring systems can help give us confidence that our systems are running smoothly and, in the event of a system failure, can quickly provide appropriate context when diagnosing the root cause.

The things we want to monitor during training and inference are different. During training, we are concerned with whether the loss is decreasing, whether the model is overfitting, etc.

During inference, however, we want confidence that our model is making correct predictions.
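One common way to get that confidence is to emit a structured log line for every prediction, so a log pipeline can index the fields and a dashboard such as Kibana can chart them. The sketch below uses only the standard library. The field names, the `prediction_monitor` logger name, and the 0.5 low-confidence threshold are assumptions for this example, and how the lines reach Kibana depends on your logging setup.

```python
import json
import logging
import time
from typing import Any, Dict

logger = logging.getLogger("prediction_monitor")


def prediction_record(
    model_version: str,
    label: str,
    confidence: float,
    latency_ms: float,
    threshold: float = 0.5,
) -> Dict[str, Any]:
    """Build one flat, JSON-serializable record describing a prediction."""
    return {
        "timestamp": time.time(),
        "model_version": model_version,
        "label": label,
        "confidence": round(confidence, 4),
        "latency_ms": round(latency_ms, 2),
        # Flag uncertain predictions so they are easy to filter on a dashboard.
        "low_confidence": confidence < threshold,
    }


def log_prediction(model_version: str, label: str, confidence: float, latency_ms: float) -> str:
    """Log the record as a single JSON line and return that line."""
    line = json.dumps(prediction_record(model_version, label, confidence, latency_ms), sort_keys=True)
    logger.info(line)
    return line
```

Tracking the share of `low_confidence` records and the distribution of `label` over time gives an early signal when live inputs drift away from the training data.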

Code with ❤️ & ☕️